How to Connect Foundation Models to Your Enterprise Data Sources Using Amazon Bedrock’s Knowledge Base

By Purush Das • March 15, 2024

In this post, we will explore how to connect foundation models to your enterprise data sources using Amazon Bedrock’s Knowledge Base.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

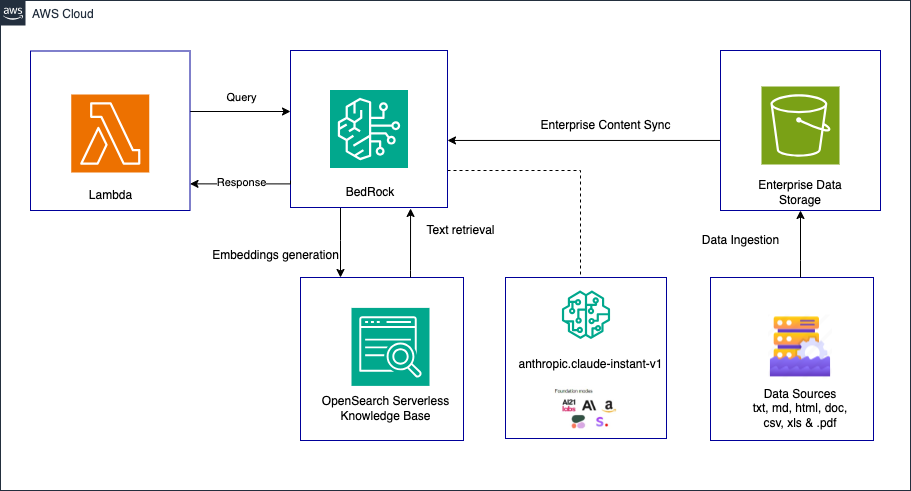

With Knowledge Bases for Amazon Bedrock, you can give FMs and agents contextual information from your enterprise’s data sources for Retrieval Augmented Generation (RAG) to deliver more relevant, accurate, and customized responses.

To equip FMs with up-to-date and proprietary information, organizations use Retrieval Augmented Generation (RAG), a technique that fetches data from company data sources and enriches the prompt to provide more relevant and accurate responses. Knowledge Bases for Amazon Bedrock is a fully managed capability that helps you implement the entire RAG workflow from ingestion to retrieval and prompt augmentation without having to build custom integrations to data sources and manage data flows. Session context management is built in, so your app can readily support multi-turn conversations.

Architecture

Prerequisites

- AWS account

- IRS tax PDF files (used here as the sample enterprise data)

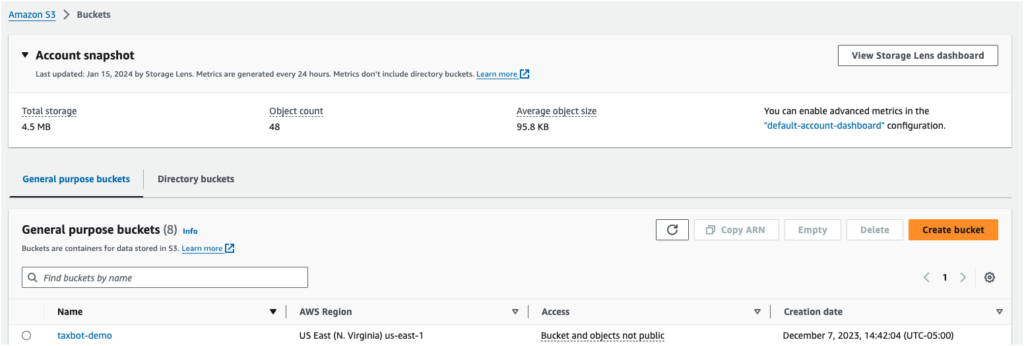

Create S3 bucket

Create an S3 bucket for your data source.

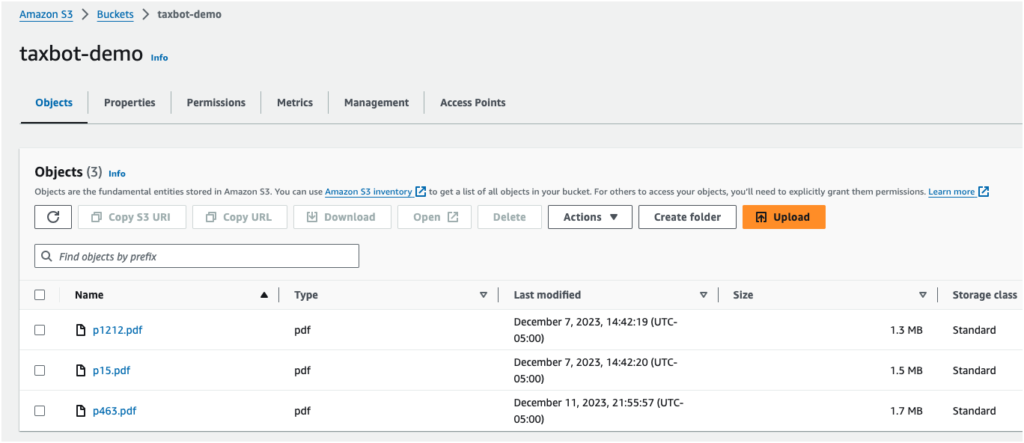
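If you prefer to script this step instead of using the console, the following is a minimal sketch with boto3. The function name, bucket name, and region are illustrative assumptions, not values from this walkthrough.

```python
def create_data_source_bucket(s3_client, bucket_name, region):
    """Create the S3 bucket that will hold the source documents.

    s3_client is expected to be a boto3 S3 client, for example
    boto3.client("s3", region_name=region).
    """
    params = {"Bucket": bucket_name}
    # us-east-1 is the default location; S3 rejects it as an explicit
    # LocationConstraint, so only pass the constraint for other regions.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**params)
    return bucket_name
```

A call might look like `create_data_source_bucket(boto3.client("s3", region_name="us-east-1"), "my-irs-docs-bucket", "us-east-1")`, where the bucket name is a placeholder that must be globally unique.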

Ingest your enterprise data into the S3 bucket

- Upload the IRS tax PDF files into the S3 bucket.

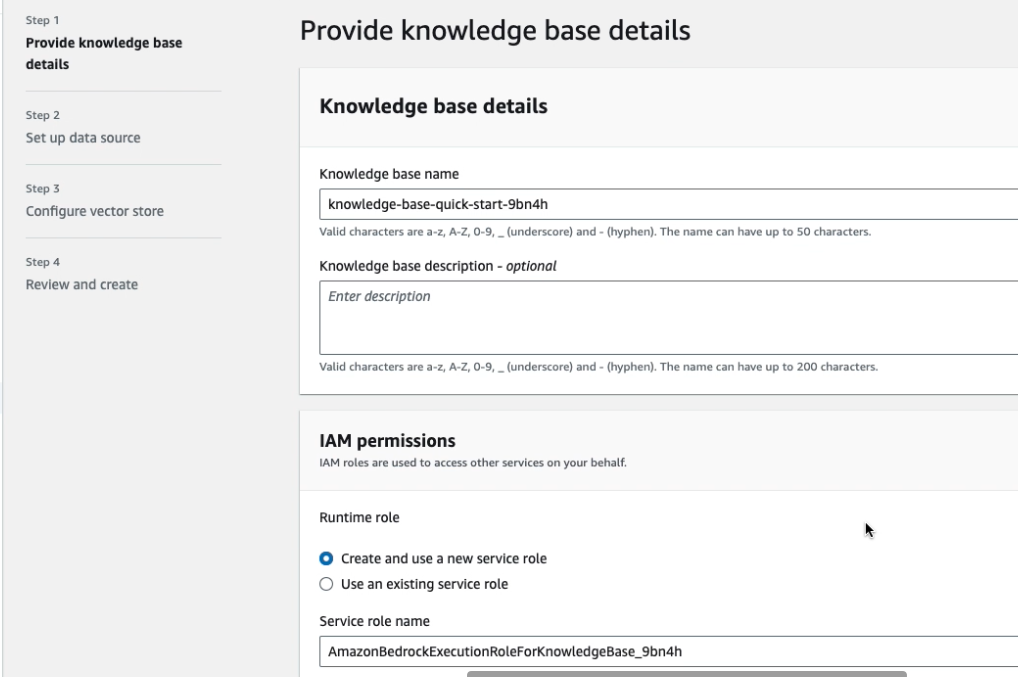

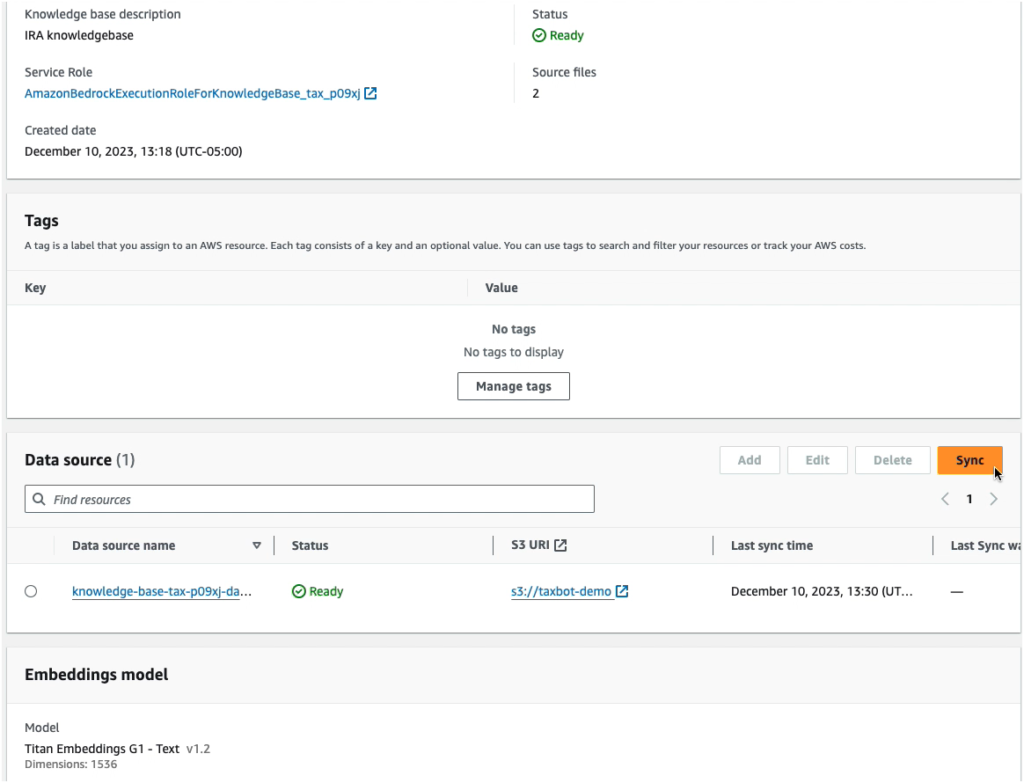
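The upload can also be done programmatically. This sketch assumes the PDFs sit in a local folder; the function name, folder path, and optional key prefix are hypothetical.

```python
from pathlib import Path


def upload_pdfs(s3_client, local_dir, bucket_name, prefix=""):
    """Upload every .pdf file in local_dir to the bucket and return the keys."""
    uploaded_keys = []
    for pdf_path in sorted(Path(local_dir).glob("*.pdf")):
        key = f"{prefix}{pdf_path.name}"
        # upload_file handles multipart uploads for large files automatically.
        s3_client.upload_file(str(pdf_path), bucket_name, key)
        uploaded_keys.append(key)
    return uploaded_keys
```

For example, `upload_pdfs(boto3.client("s3"), "./irs-pdfs", "my-irs-docs-bucket")` would upload each PDF under its own file name.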

Create a Bedrock Knowledge Base

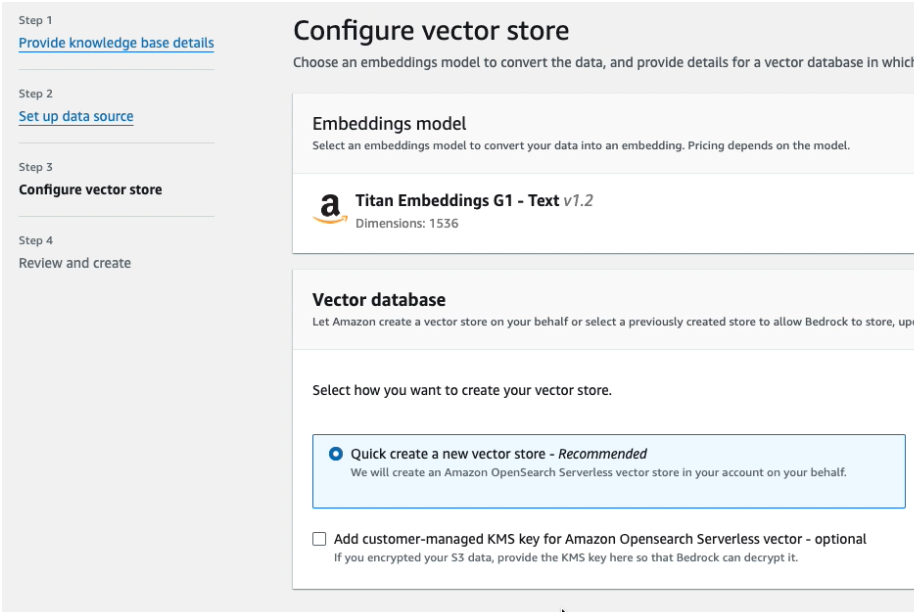

- Create a Bedrock Knowledge Base backed by Amazon OpenSearch Serverless

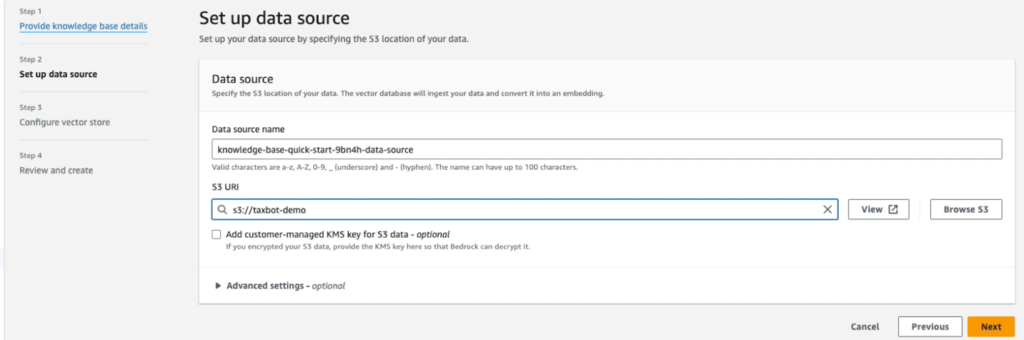

- Set up the data source by selecting the S3 bucket

- Configure the vector store

This will create an Amazon OpenSearch Serverless vector store in your account on your behalf.

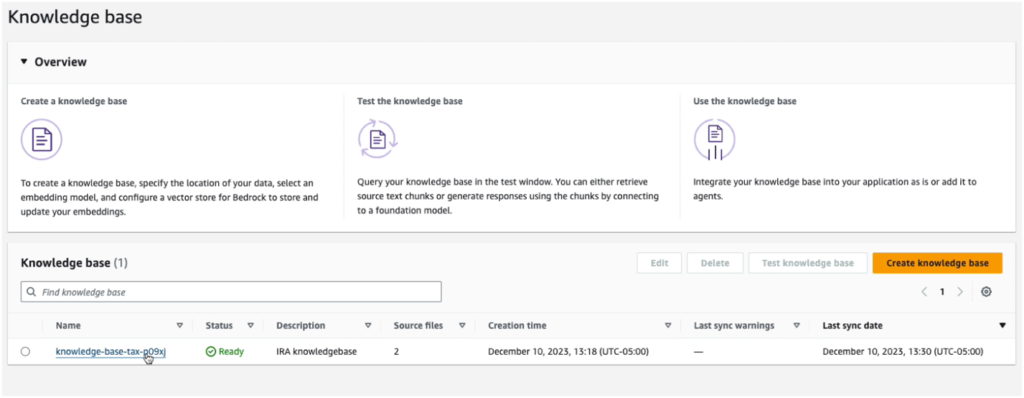

- Create the Knowledge Base

- Sync the Knowledge Base so that the documents in the S3 bucket are ingested into the vector store

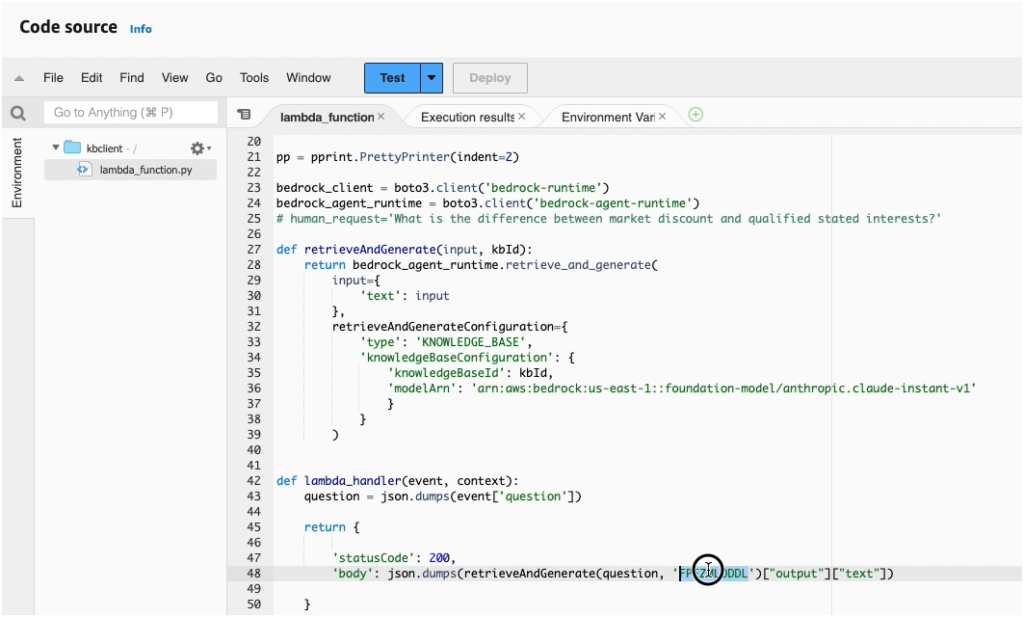
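The console's sync action corresponds to starting an ingestion job. A minimal sketch of doing the same through the boto3 `bedrock-agent` client is shown below; the function name and polling interval are assumptions, and the Knowledge Base ID and data source ID must be taken from your own account.

```python
import time

# Statuses after which the ingestion job will not change any further.
TERMINAL_STATUSES = ("COMPLETE", "FAILED", "STOPPED")


def sync_knowledge_base(agent_client, knowledge_base_id, data_source_id, poll_seconds=10):
    """Start an ingestion job and poll until it reaches a terminal status.

    agent_client is expected to be boto3.client("bedrock-agent").
    """
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]
    job_id = job["ingestionJobId"]

    while job["status"] not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job_id,
        )["ingestionJob"]
    return job["status"]
```

Re-running the sync after adding or changing files in the bucket keeps the vector store aligned with the data source.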

Create a Lambda function

Create a Lambda function that calls the Knowledge Base via Bedrock APIs using the boto3 client.

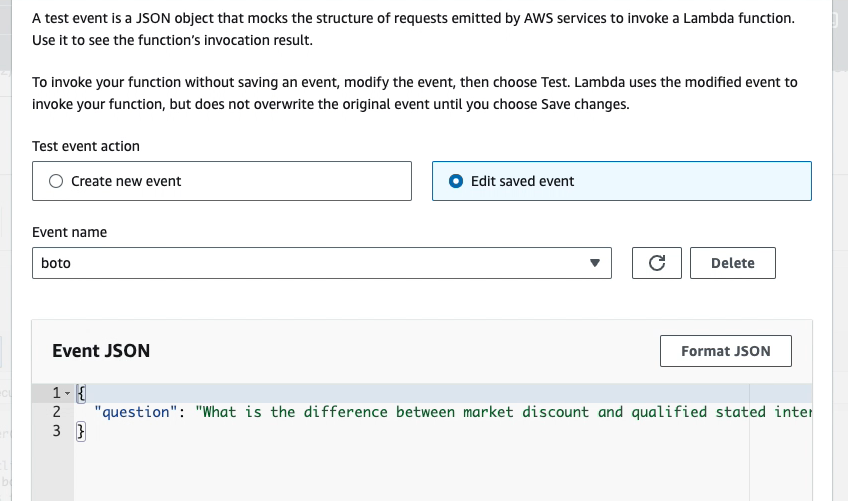
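The function code appears only in the screenshot, so here is a minimal sketch of what such a handler could look like, not necessarily the code pictured. It uses the `retrieve_and_generate` call of the boto3 `bedrock-agent-runtime` client. The event key `question` and the environment variable names `KNOWLEDGE_BASE_ID` and `MODEL_ARN` are assumptions made for illustration.

```python
import json
import os

_client = None  # cached across warm Lambda invocations


def get_client():
    """Create the Bedrock agent runtime client on first use."""
    global _client
    if _client is None:
        import boto3  # bundled with the Lambda Python runtime

        _client = boto3.client("bedrock-agent-runtime")
    return _client


def ask_knowledge_base(client, question, knowledge_base_id, model_arn):
    """Retrieve relevant chunks and generate an answer in a single call."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    return response["output"]["text"]


def lambda_handler(event, context):
    # Assumed event shape: {"question": "..."}
    question = event["question"]
    answer = ask_knowledge_base(
        get_client(),
        question,
        os.environ["KNOWLEDGE_BASE_ID"],  # hypothetical variable name
        os.environ["MODEL_ARN"],  # hypothetical variable name
    )
    return {
        "statusCode": 200,
        "body": json.dumps({"question": question, "answer": answer}),
    }
```

The function's execution role needs permission to call Bedrock, and the boto3 version available to the function must be recent enough to include the `bedrock-agent-runtime` client. The response also carries a `sessionId`, which can be passed back on later calls to continue a multi-turn conversation.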

Test the Lambda function

- Configure a test event with a JSON request that contains an IRS question

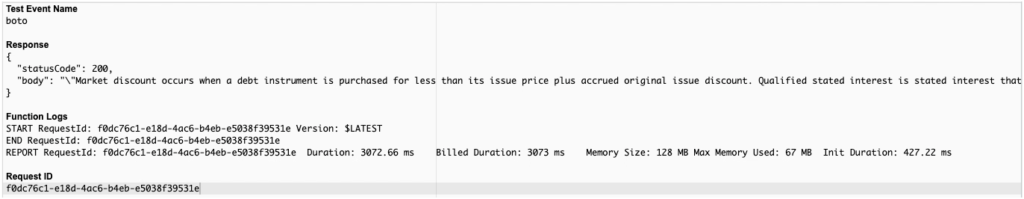

- Run the Lambda function and verify that it returns the expected response
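The same test can be run outside the console by invoking the deployed function with boto3. This is a sketch under assumptions: the function name is a placeholder, and the event shape `{"question": ...}` is hypothetical, so adjust it to whatever your handler actually reads.

```python
import json


def invoke_with_question(lambda_client, function_name, question):
    """Invoke the Lambda function synchronously and return its parsed result.

    lambda_client is expected to be boto3.client("lambda").
    """
    event = {"question": question}  # hypothetical event shape
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(event).encode("utf-8"),
    )
    # The Payload field is a stream that holds the function's JSON result.
    return json.loads(response["Payload"].read())
```

Checking that the returned dictionary contains a non-empty answer grounded in the uploaded PDFs is a simple way to confirm the whole RAG path works end to end.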

In this post, we explored how to use Amazon Bedrock’s Knowledge Base, Amazon OpenSearch Serverless, S3, and Lambda to implement Retrieval Augmented Generation (RAG) that can be invoked via API.

Note: Delete the resources you created while following this blog so that you are not charged unnecessarily.
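Part of the cleanup can be scripted. The sketch below deletes the Knowledge Base, empties the bucket, and then deletes it; the function name is an assumption, and it assumes an unversioned bucket. The OpenSearch Serverless collection and the Lambda function are not covered here, so check for them and remove them separately.

```python
def cleanup(s3_client, agent_client, bucket_name, knowledge_base_id):
    """Delete the Knowledge Base, then empty and delete the S3 bucket."""
    agent_client.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)

    # A bucket must be empty before it can be deleted. Each page holds at
    # most 1000 keys, which is also the limit for one delete_objects call.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket_name, Delete={"Objects": keys})

    s3_client.delete_bucket(Bucket=bucket_name)
```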