How to Implement Retrieval Augmented Generation Using Pinecone as a Knowledge Base for Amazon Bedrock

By Purush Das • March 17, 2024

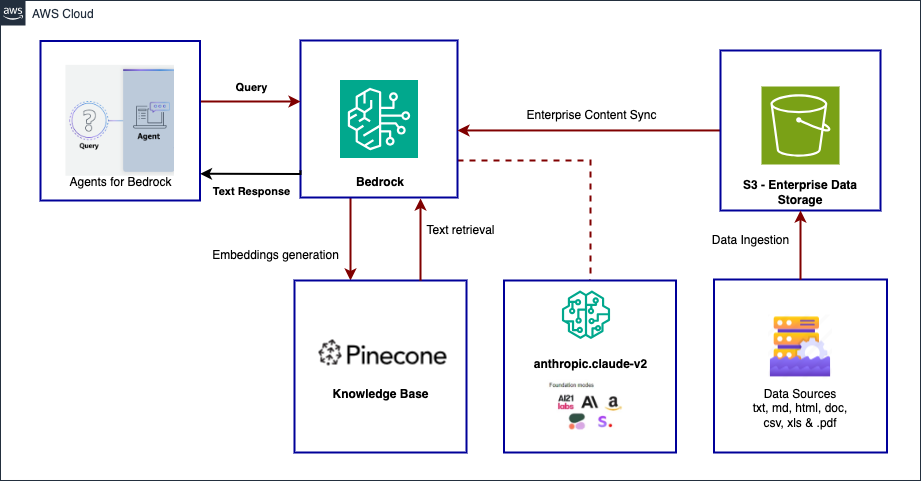

In this blog, we will explore how to use Pinecone as a knowledge base for Amazon Bedrock to build GenAI applications, and test the setup using a Bedrock Agent.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Pinecone makes it easy to provide long-term memory for high-performance AI applications. It’s a managed, cloud-native vector database with a simple API and no infrastructure hassles. Pinecone serves fresh, filtered query results with low latency at the scale of billions of vectors.

With Knowledge Bases for Amazon Bedrock, you can give FMs and agents contextual information from your enterprise’s data sources for Retrieval Augmented Generation (RAG) to deliver more relevant, accurate, and customized responses.

In Bedrock, users interact with Agents that combine the natural language interface of the supported LLMs with the content of a Knowledge Base. Bedrock’s Knowledge Base feature uses a supported embedding model to generate embeddings from the original data source. These embeddings are stored in Pinecone, and the Pinecone index is used to retrieve semantically relevant content when the user sends a query to the Agent.
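To make "semantically relevant" concrete, here is a minimal sketch of similarity-based retrieval in plain Python. It is an illustration only: the three-dimensional vectors and the chunk texts are made up, the names `cosine_similarity`, `top_k`, and `index` are hypothetical, and Pinecone's own search is a managed service that works differently at scale.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, index, k=2):
    """Return the k chunk texts whose embeddings are closest to the query."""
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Toy "embeddings" (assumption): real ones come from an embedding model
# and have far more dimensions.
index = [
    ("Standard deduction amounts", [0.9, 0.1, 0.0]),
    ("Filing deadlines", [0.1, 0.9, 0.0]),
    ("Mailing addresses", [0.0, 0.1, 0.9]),
]
```

Calling `top_k(query_vec, index, k=1)` with a query vector close to the first entry returns that chunk's text, which is the piece of context the Agent would then pass to the LLM.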

Architecture

Pre-requisites

- AWS account

- Pinecone account and API key

- Pinecone index

- IRS PDF files

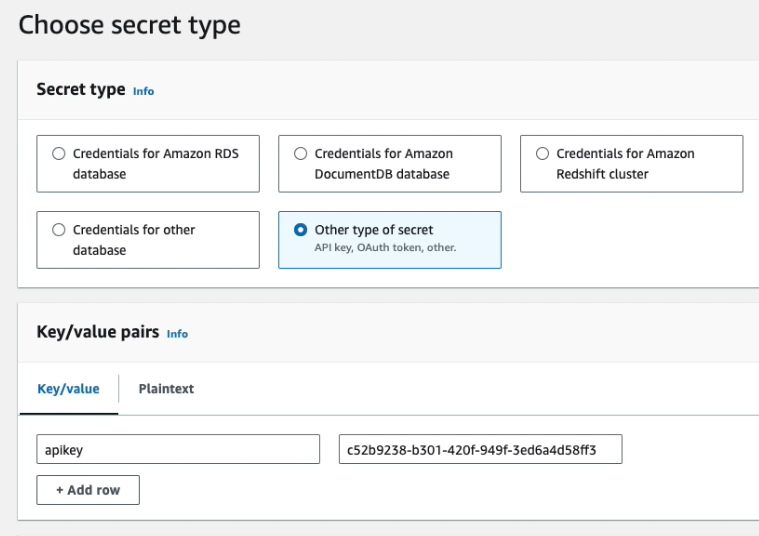

Configure Pinecone secrets in AWS’s Secrets Manager

- Create a new key/value pair with the key apiKey and paste your Pinecone API key as its corresponding value.

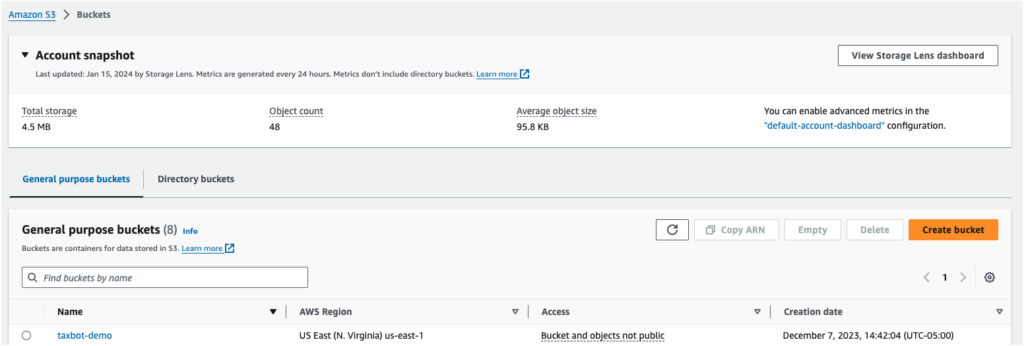

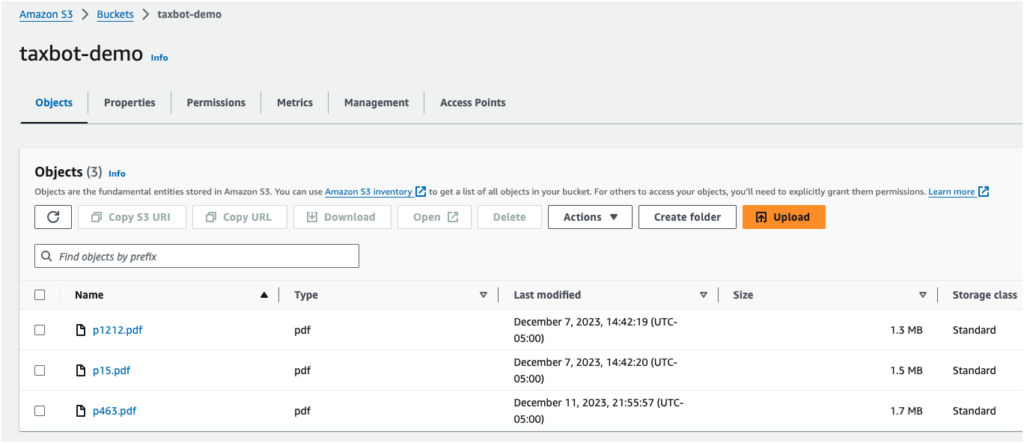
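If you prefer scripting this step over using the console, it can be sketched with boto3 roughly as follows. The function names, the secret name you pass in, and the default region are assumptions for illustration; only the `apiKey` key comes from the step above.

```python
import json

def build_pinecone_secret(api_key):
    """Serialize the key/value pair from the step above: key 'apiKey', value the Pinecone API key."""
    return json.dumps({"apiKey": api_key})

def create_pinecone_secret(secret_name, api_key, region="us-east-1"):
    """Create the secret and return its ARN. Needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the helper above works without boto3

    client = boto3.client("secretsmanager", region_name=region)
    response = client.create_secret(
        Name=secret_name,  # hypothetical name, choose your own
        SecretString=build_pinecone_secret(api_key),
    )
    return response["ARN"]
```

The returned ARN is the value you later supply as the Credential Secret ARN when configuring the vector store.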

Create S3 bucket

Create an S3 bucket for your data source.

Load your enterprise data into the S3 bucket

- Upload the IRS tax PDF files into the S3 bucket.

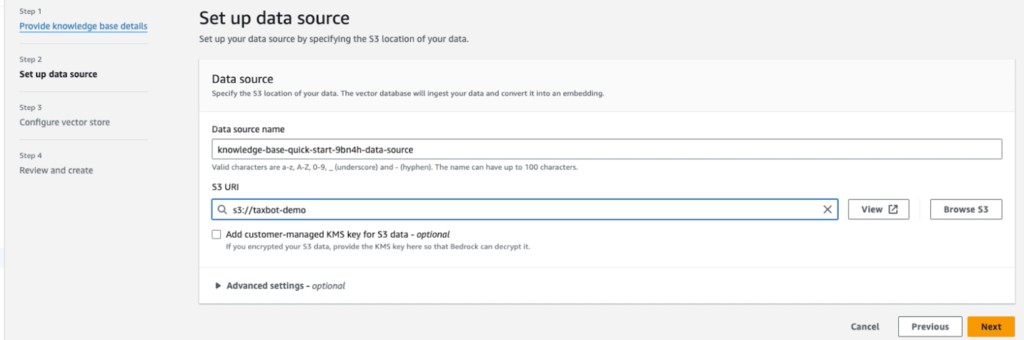

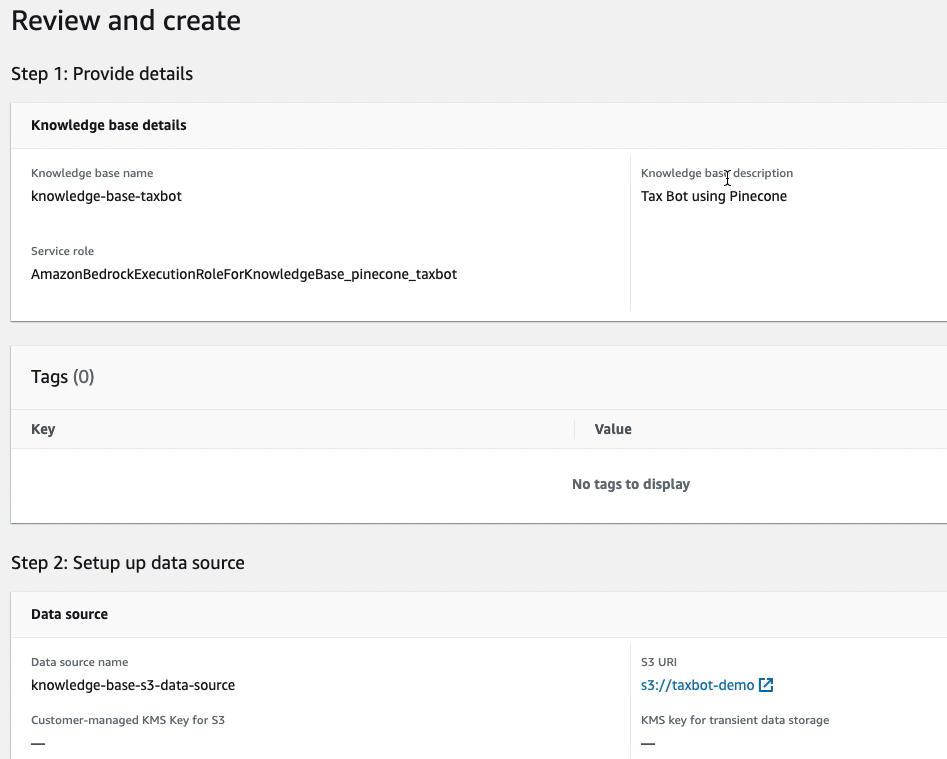
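The bucket creation and upload can also be scripted. The following is a minimal sketch with boto3, assuming the PDF files sit in a local folder; the function names, folder, bucket name, and default region are all placeholders rather than values from this walkthrough.

```python
from pathlib import Path

def select_pdfs(paths):
    """Keep only paths with a .pdf extension (case-insensitive), sorted."""
    return sorted(p for p in map(Path, paths) if p.suffix.lower() == ".pdf")

def upload_pdfs(folder, bucket, region="us-east-1"):
    """Create the bucket and upload every PDF in folder. Needs boto3 and AWS credentials."""
    import boto3  # imported lazily so select_pdfs works without boto3

    s3 = boto3.client("s3", region_name=region)
    # us-east-1 rejects an explicit LocationConstraint; other regions require one.
    if region == "us-east-1":
        s3.create_bucket(Bucket=bucket)
    else:
        s3.create_bucket(
            Bucket=bucket,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    uploaded = []
    for pdf in select_pdfs(Path(folder).iterdir()):
        # The object key is just the file name; adjust if you want prefixes.
        s3.upload_file(str(pdf), bucket, pdf.name)
        uploaded.append(pdf.name)
    return uploaded
```

Bucket names are globally unique, so `create_bucket` fails if the name is already taken by another account.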

Create a Bedrock Knowledge Base

- Create a Bedrock Knowledge Base using Pinecone

- Set up data source by selecting the S3 bucket created earlier

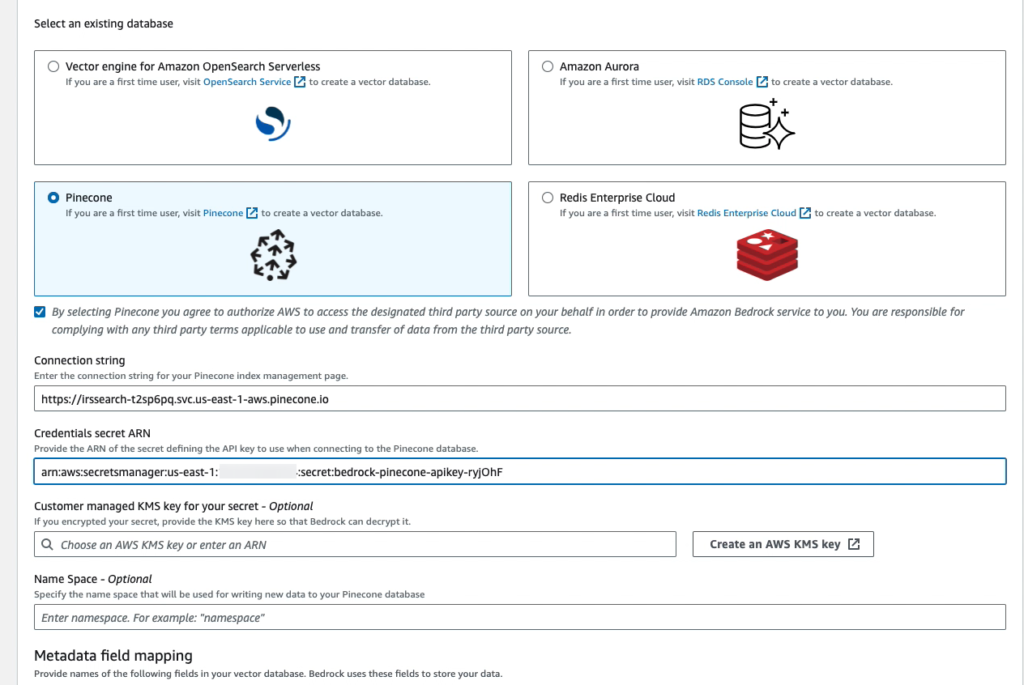

- Configure vector store by selecting Pinecone

- Connection String: Provide the Pinecone index endpoint retrieved from the Pinecone console

- Credential Secret ARN: Provide the secret ARN you created

Bedrock will use this existing Pinecone Serverless index as the vector store for the knowledge base.

- Review and Create Knowledge base

- Sync Knowledge base

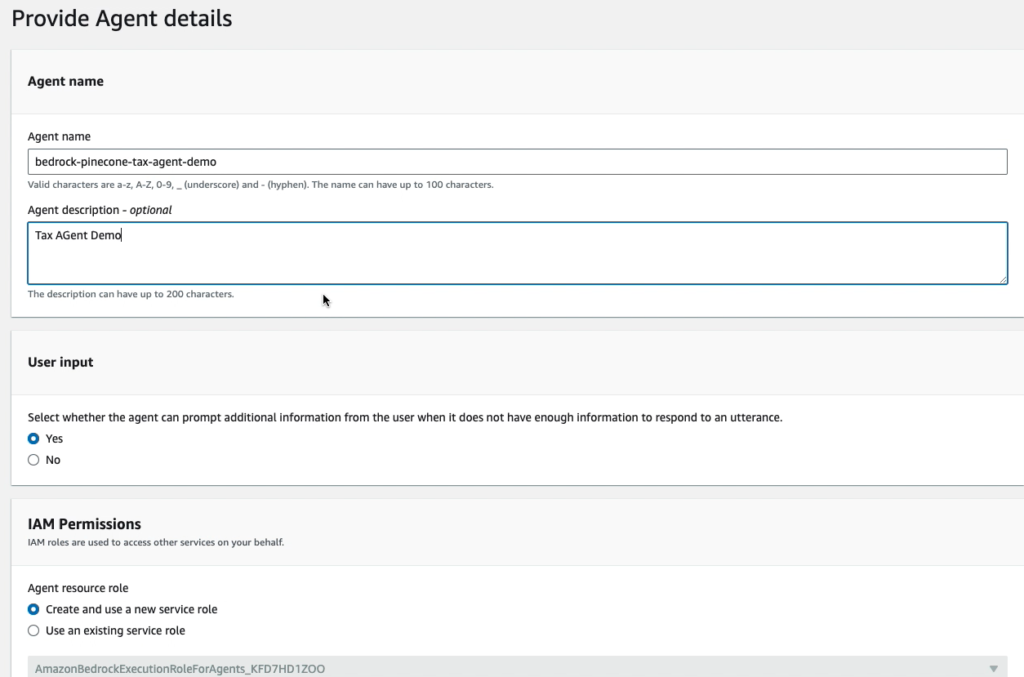

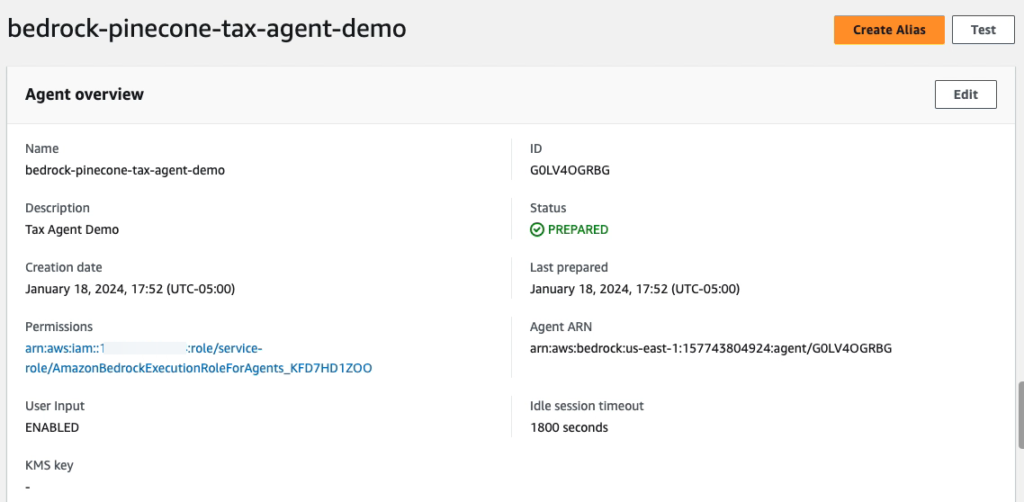
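For readers who want to automate the steps above, here is a rough boto3 sketch of the same flow: create the knowledge base backed by Pinecone, attach the S3 data source, and start a sync. The role ARN, embedding model ARN, field names `text` and `metadata`, and all function names are assumptions; the console normally fills in several of these for you.

```python
def pinecone_storage_config(connection_string, secret_arn,
                            text_field="text", metadata_field="metadata"):
    """Build the storageConfiguration for a Pinecone-backed knowledge base.

    The field names are assumed defaults; use whatever your index expects.
    """
    return {
        "type": "PINECONE",
        "pineconeConfiguration": {
            "connectionString": connection_string,
            "credentialsSecretArn": secret_arn,
            "fieldMapping": {
                "textField": text_field,
                "metadataField": metadata_field,
            },
        },
    }

def create_knowledge_base(name, role_arn, embedding_model_arn, bucket_arn,
                          storage_config, region="us-east-1"):
    """Create the knowledge base, add the S3 data source, and start a sync."""
    import boto3  # imported lazily; needs AWS credentials at call time

    client = boto3.client("bedrock-agent", region_name=region)
    kb = client.create_knowledge_base(
        name=name,
        roleArn=role_arn,
        knowledgeBaseConfiguration={
            "type": "VECTOR",
            "vectorKnowledgeBaseConfiguration": {
                "embeddingModelArn": embedding_model_arn,
            },
        },
        storageConfiguration=storage_config,
    )["knowledgeBase"]
    # In practice you may need to wait until the knowledge base is ACTIVE
    # before the next two calls succeed.
    ds = client.create_data_source(
        knowledgeBaseId=kb["knowledgeBaseId"],
        name=f"{name}-s3",
        dataSourceConfiguration={
            "type": "S3",
            "s3Configuration": {"bucketArn": bucket_arn},
        },
    )["dataSource"]
    # Programmatic counterpart of the console's Sync action.
    client.start_ingestion_job(
        knowledgeBaseId=kb["knowledgeBaseId"],
        dataSourceId=ds["dataSourceId"],
    )
    return kb["knowledgeBaseId"]
```

Keeping the storage configuration in its own function makes it easy to inspect the exact Pinecone settings before any AWS call is made.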

Set up Bedrock Agent

- Create an Agent to test the Knowledge Base

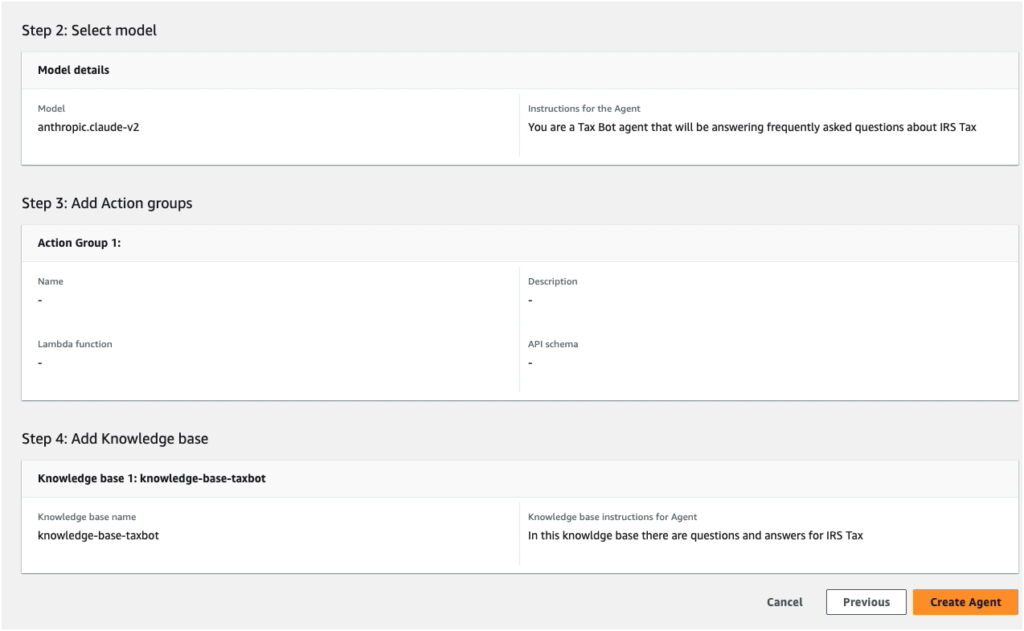

Select the LLM Provider and model

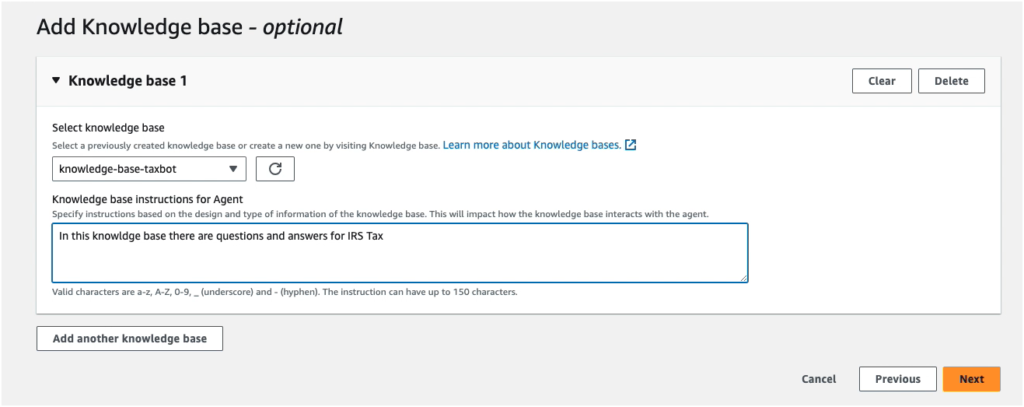

Select the knowledge base created previously

Review and Create the Bedrock agent.

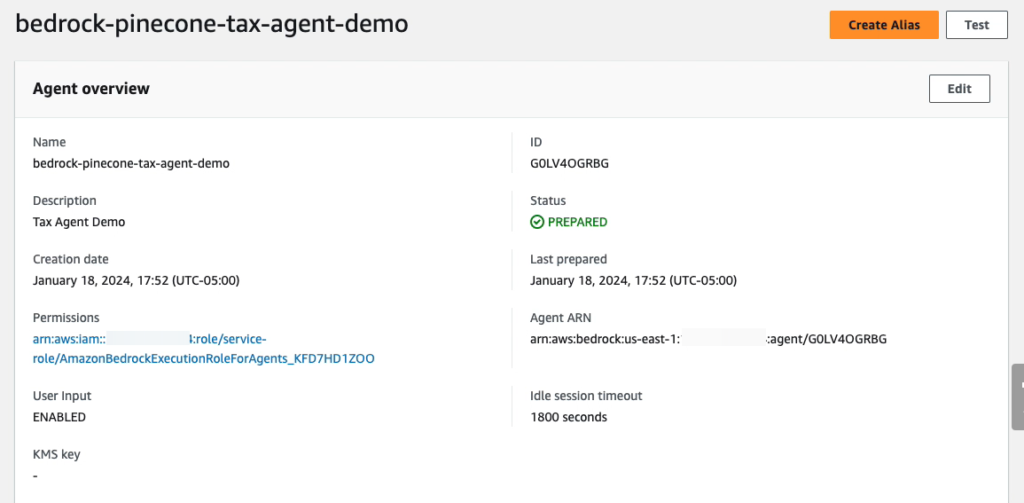

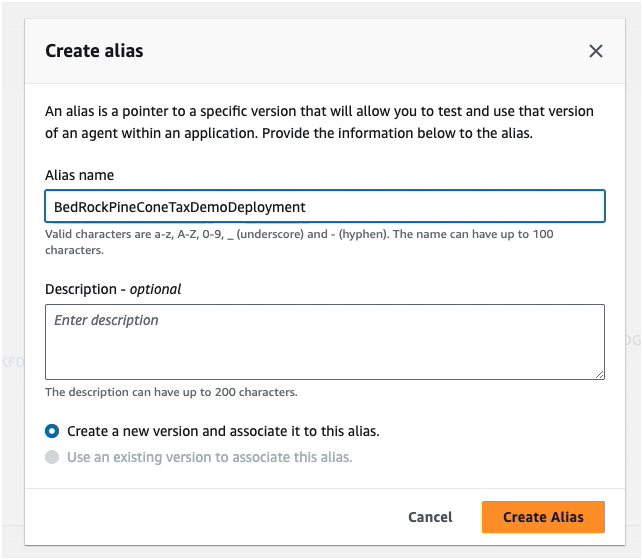
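The agent setup above can be approximated in code as well. This is a sketch under assumptions: the agent name, model ID, IAM role ARN, and instruction text are placeholders you would supply, and the function names are hypothetical.

```python
def agent_request(name, model_id, role_arn, instruction):
    """Collect the arguments for create_agent in one place."""
    return {
        "agentName": name,
        "foundationModel": model_id,
        "agentResourceRoleArn": role_arn,
        "instruction": instruction,
    }

def create_agent_with_kb(request, knowledge_base_id, kb_description,
                         region="us-east-1"):
    """Create the agent, attach the knowledge base, and prepare the draft."""
    import boto3  # imported lazily; needs AWS credentials at call time

    client = boto3.client("bedrock-agent", region_name=region)
    agent_id = client.create_agent(**request)["agent"]["agentId"]
    # You may need to wait for the agent to leave the CREATING state
    # before the association call succeeds.
    client.associate_agent_knowledge_base(
        agentId=agent_id,
        agentVersion="DRAFT",
        knowledgeBaseId=knowledge_base_id,
        description=kb_description,
    )
    # Prepare the working draft so it can be tested and aliased.
    client.prepare_agent(agentId=agent_id)
    return agent_id
```

The knowledge base description matters more than it looks: the agent uses it to decide when to consult the knowledge base.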

Create an Alias for the Bedrock Agent

- We need to create an alias to deploy the agent.

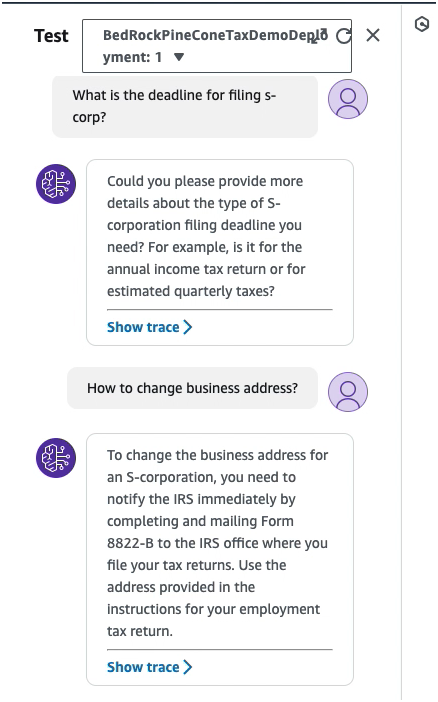

- Test using the newly created Agent in the Playground
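Outside the Playground, the alias can be created and the agent queried from code. The sketch below assumes you already have the agent ID; the alias name, the question text, and the function names are placeholders. The response from `invoke_agent` is an event stream, so the text has to be assembled from its chunk events.

```python
import uuid

def collect_completion(events):
    """Join the text of all 'chunk' events in an invoke_agent response stream."""
    parts = []
    for event in events:
        chunk = event.get("chunk")
        if chunk and "bytes" in chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)

def create_alias(agent_id, alias_name, region="us-east-1"):
    """Create an alias for the agent and return the alias ID."""
    import boto3  # imported lazily; needs AWS credentials at call time

    client = boto3.client("bedrock-agent", region_name=region)
    alias = client.create_agent_alias(agentId=agent_id, agentAliasName=alias_name)
    return alias["agentAlias"]["agentAliasId"]

def ask_agent(agent_id, alias_id, question, region="us-east-1"):
    """Send one question to the agent in a fresh session and return the answer text."""
    import boto3

    runtime = boto3.client("bedrock-agent-runtime", region_name=region)
    response = runtime.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=str(uuid.uuid4()),  # new session per call
        inputText=question,
    )
    return collect_completion(response["completion"])
```

Reusing the same `sessionId` across calls would let the agent keep conversational context; a fresh one per call keeps each test question independent.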

In this blog, we explored the steps required to create a Pinecone Knowledge Base for Amazon Bedrock and tested it using a Bedrock Agent.

Note: Delete any resources you created while following this blog so that you are not charged unnecessarily.